RL Examples

![]()

- Fly stunt manoeuvres in a helicopter – you control the helicopter and make manoeuvres. There is a positive reward for following the desired trajectory or when the helicopter comes within a radius of where we want it to be and a large negative reward for crashing so that it learns that it is bad to crash.

- Build a machine to defeat the world champion at the game of chess – positive/negative reward for winning/losing a game; at the end of the game, it knows if it has won or lost. The agent will figure out for itself how to optimize the rewards and make decisions along the way to maximize the rewards at the end of the game.

- Manage investment portfolio where investment agent is getting data at real-time and decides where to invest – in which financial product such that the reward (money) is maximum. There is a positive reward for each Rupee in the bank.

- Make a humanoid robot walk – a positive reward for forwarding motion and a negative reward for falling over. To make it walk across the room, you check if it is making progress at each step and you don’t want it to fall over. At each step, there is a reward and it needs to figure out to walk across the room based on that.

- Play Atari games better than humans.

RL Framework

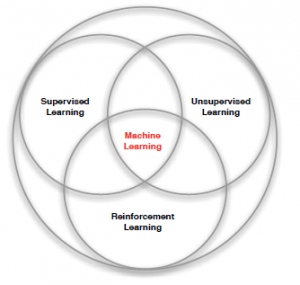

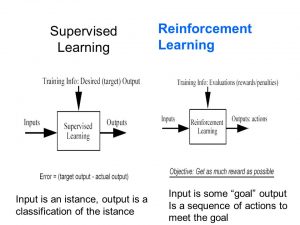

Supervised Learning - Input is an instance, the output is a. classification of the instance.

Reinforcement Learning - Input is some 'goal', the output is a sequence of actions. meet the goal.

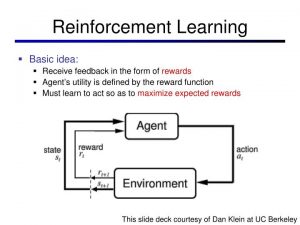

Reinforcement learning is based on the reward hypothesis:

All goals can be described by the maximization of the expected cumulative reward.

A reward Rt is a scalar feedback signal which indicates how well the agent is doing at step t and the agent’s job is to maximize the cumulative reward.

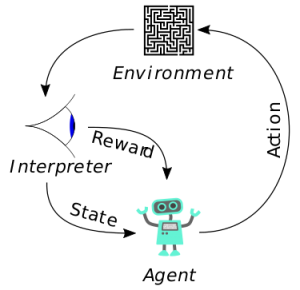

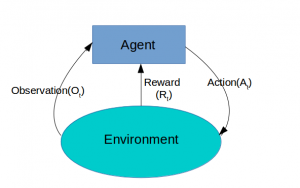

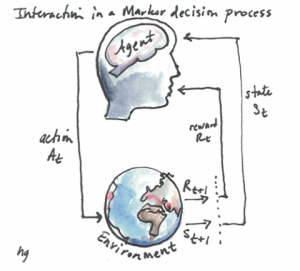

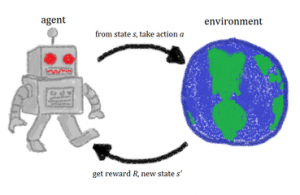

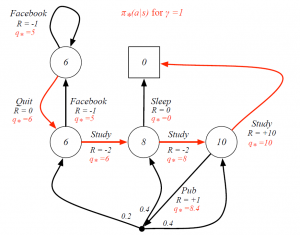

We use the below RL framework to solve the RL problems. RL problem is one of sequential decision making with the goal to select actions to maximize total future reward. So we have to think ahead because actions may have long term consequences, rewards may be delayed and sometimes you may have to sacrifice immediate rewards to gain more long term rewards.

For example, a financial investment may take months to mature so you are losing money now but in the end you may get more money; for refuelling a helicopter you have to stop your manoeuvring thereby lose rewards but it might prevent a crash in several hours; in a chess game you make a move to block the opponent which might help to improve winning chances many many moves from now…

Markov Property

The future is independent of the past given the present

H1:t à St à Ht+1:∞

Once the state is known the history may be thrown away.

![]()

The state is sufficient statistic of the future. So, what it says is that if you have the Markov Property then the future is independent of the past given the present. In other words if you have got the state representation St and it is Markov then you can throw away rest of the history as it will not give you any more information of what will happen in the future than the state you have because that state characterizes everything about the future – all future observations, actions and rewards.

St is more compact to work with than entire history.

The distribution of events that might happen to us conditioned on the current state is the same as conditioned on the entire state. So if we make decisions based on St they will still be optimal because we have not thrown away information.

For example, in case of helicopter manoeuvring case, if it is Markov, then its current position & velocity, wind direction can help us determine what will be its next position – we don’t need to know what its position was 10 minutes ago. You don’t need to remember the history of its previous trajectory because it is irrelevant.

Now, in contrast, if you took the imperfect state representation, that is non-Markov – like you have the position of the helicopter but not the velocity, in such case where the helicopter is now will not determine where it will be next because you don’t know how fast it is moving. You will have to look back at history to figure out what its momentum is and that sort of other information.

So we always have to come up with a Markov state to solve the RL problem and our job is to find the State representation that is useful and effective in predicting what happens next.

Discrete-time stochastic control process

MDP is a discrete-time stochastic control process.

It provides a mathematical framework for modelling decision making in situations where outcomes are partly random and partly under the control of a decision maker.

Markovian Property of MDP: Only the Present Matters

- It is not related to any of the states the agent visited previously

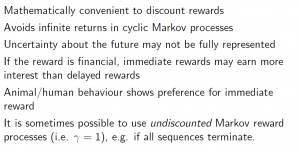

- The solution to the MDP problem is policy (π)

- An Optimal Policy (π*) maximizes long term cumulative reward problem

At each time step, the process is in some state s, and the decision maker may choose any action a that is available in state s. The process responds at the next time step by randomly moving into a new state s', and giving the decision maker a corresponding reward Ra(s,s').

The probability that the process moves into its new state s' is influenced by the chosen action. Specifically, it is given by the state transition function Pa(s,s'). Thus, the next state s' depends on the current state s and the decision maker's action a.

But given s and a, it is conditionally independent of all previous states and actions; in other words, the state transitions of an MDP satisfies the Markov property.

MDPs are useful for studying optimization problems solved via dynamic programming and reinforcement learning.

Markov Reward Process (MRP)

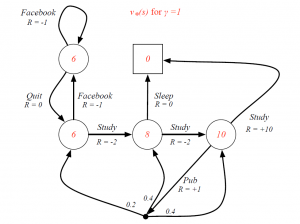

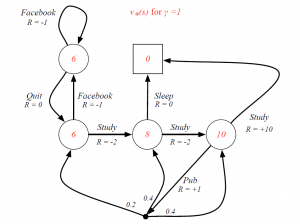

Optimal Value Function for Student MDP

Optimal Action-Value Function for Student MDP

Optimal Policy For Student MDP

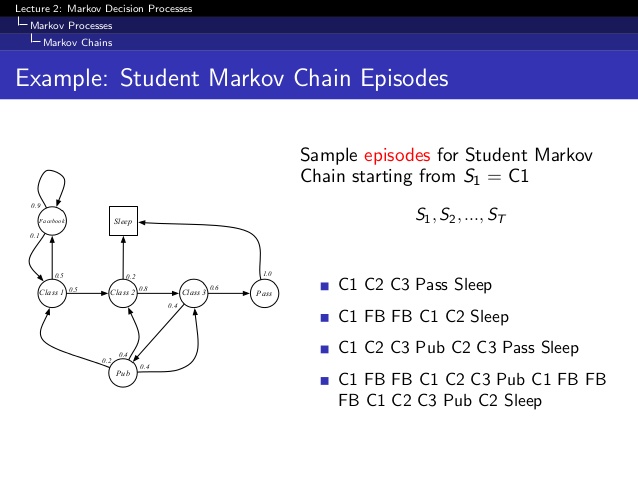

Markov Process or Markov Chain

A Markov Process is a memory-less random process, that is, a sequence of random states S1,S2,… with the Markov Property. It is represented as a tuple < S, P > where S is a finite set of states and P is the transition probability matrix.

Pss’ = P[ St+1=s’|St=s]

Markov Reward Process

A Markov Reward Process is a Markov chain with values. It is represented as a tuple < S, P, R, γ >, where R is the reward function and γ is a discount factor

Rs = E[ Rt+1 | St=s ]

γ ϵ [ 0,1 ]

So, MRP is an MP with some value judgment to decide how good it is to be in some particular state. R tells us how much reward do we get from that state at that moment and discount factor is used to calculate the accumulated reward for the sample sequence of states with rewards discounted over the sequence.

Reward is the immediate value we get for being in a particular state and our objective is to maximize the accumulated reward.

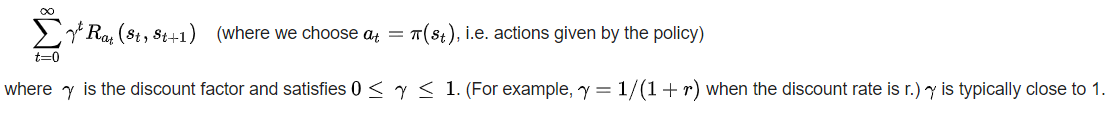

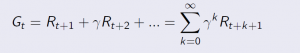

Return

The return Gt is the total discounted reward from time-stamp t

![]()

![]()

Our goal is to maximize the Gt that is the sum of rewards overall time-stamps into the future. But we’re going to discount the rewards by the discount factor γ for each time-stamp infinitely into the future.

γ is the present value of future rewards.

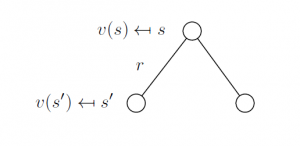

Value function V(s)

The value function v(s) gives the long-term value of state s

For an MRP v(s) is the expected return starting from state s

v(s) = E [ Gt | St = s ]

Gt = Rt+1 + γRt+2 + γ2Rt+3+…

It shows how good it is to be in a particular state, how much reward can we expect to get if we take a particular action to estimate how well are we doing in a particular state. It is the prediction of the future reward used to evaluate the goodness/badness of states to select between actions that give the maximum reward.

![]()

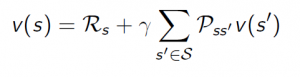

Bellman Equation for MRPs

The value function can be decomposed into two parts:

- immediate reward Rt+1

- discounted value of successor state γ*v(St+1)

v(s) = E [ Gt | St = s ]

# expanding Gt

v(s) = E[ Rt+1 + γRt+2 + γ2Rt+3+… | St=s ]

v(s) = E[ Rt+1 + γ(Rt+2 + γRt+3 + γ2Rt+4 + …) | St=s ]

v(s) = E[ Rt+1 + γGt+1 | St=s ]

v(s) = E[ Rt+1 + γv(St+1) | St=s ]

Rt+1 – is immediate reward for being in state s, i.e. Rs

v(St+1) – value for being in new state s’, which is the probability of transitioning to that sate multiplied by the rewards for being in that state

Therefore,

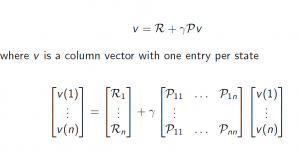

The above Bellman equation can be expressed concisely using matrices

Model-based Vs Model-free Agents

Model

It is the agent’s representation of the environment, what the agent thinks how the environment works.

A model predicts what the environment will do next.

Transitions P – predicts the next state

Pss’ = P[ S’=s’|S=s, A=a ]

Reward R – predicts the next immediate reward

Rs = E[ R| S=s, A=a ]

These three pieces, policy, value function and model – all may or may not be used.

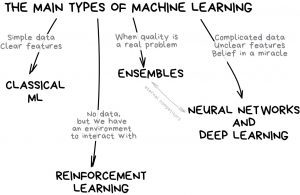

Value-based RL agents use only value function, no policy (implicit).

Policy-based use a policy and no value function.

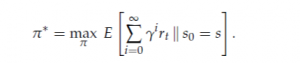

Model-Free use policy and/or value function but no model. We don’t try to understand the environment – how the environment works. Using Q-learning.

Model-based use policy and/or value function.

See Model-free GridWorld Example and Model-free GridWorld Example.

What is Dynamic Programming?

It is a method of solving problems by breaking them down into sub-problems. So by solving the sub-problems, you can combine the solutions to address the complete problem.

Dynamic Programming is a very general solution method for problems which have two properties:

Optimal sub-structure – Principle of optimality applies, the optimal solution can be decomposed into sub-problems.

Overlapping sub-problems – Sub-problems recur many times. Solutions can be cached and reused.

Markov decision processes satisfy both properties – Bellman equation gives recursive decomposition and Value function stores and reuses solutions

Planning by Dynamic Programming

Dynamic programming assumes full knowledge of the MDP. It is used for planning in an MDP.

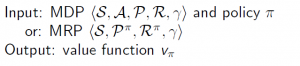

The prediction method is to evaluate the future given a policy.

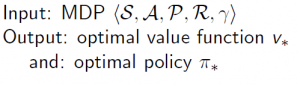

The control method is to optimize the future by finding the best policy.

For prediction:

Or for Control:

Batch Reinforcement Learning

(Source: Reinforcement Learning in R, by Nicolas Pröllochs, Stefan Feuerriegel)

Reinforcement learning generally maximizes the sum of expected rewards in an agent-environment loop. However, this setup often needs many interactions until convergence to an optimal policy. As a result, the approach is hardly feasible in complex, real-world scenarios.

A suitable remedy comes in the form of so-called batch reinforcement learning, which drastically reduces the computation time in most settings.

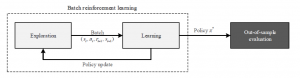

![]()

How Batch Learning saves time?

Different from the previous setup, the agent no longer interacts with the environment by processing single state-action pairs for which it updates the Q-table on an individual basis.

Instead, it directly receives a set of tuples (si, ai, ri+1, si+1) sampled from the environment. After processing this batch, it updates the Q-table, as well as the policy. This procedure commonly results in greater efficiency and stability of the learning process, since the noise attached to individual explorations cancels out as one combines several explorations in a batch.

Batch Reinforcement Learning

The batch process is grouped into three phases.

- In the first phase, the learner explores the environment by collecting transitions with an arbitrary sampling strategy, e.g. using a purely random policy or ε-greedy. These data points can be, for example, collected from a running system without the need for direct interaction.

- In a second step, the stored training examples then allow the

agent to learn a state-action function, e.g. via Q-learning. The result of the learning step is the best possible policy for every state transition in the input data. - In the final step, the learned policy can be applied to the system. Here the policy remains fixed and is no longer improved.

Conclusion

(Source: Reinforcement Learning in R, by Nicolas Pröllochs, Stefan Feuerriegel)

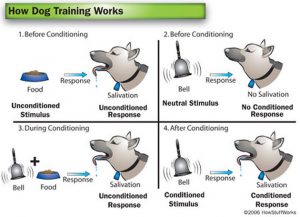

Reinforcement learning has gained considerable traction as it mines real experiences with the help of trial-and-error learning.

In this sense, it attempts to imitate the fundamental method used by humans to learn optimal behaviour without the need for an explicit model of the environment.

![]()

Reinforcement Learning with R programming

In contrast to many other approaches from the domain of machine learning, reinforcement learning solves sequential decision-making problems of arbitrary length and can be used to learn optimal strategies in many applications such as robotics and game playing.

Implementing reinforcement learning is programmatically challenging since it relies on continuous interactions between an agent and its environment. To remedy this, the ReinforcementLearning package for R, allows an agent to learn from states, actions and rewards.

The result of the learning process is a policy that defines the best possible action in each state. The package is highly flexible and incorporates a variety of learning parameters, as well as substantial performance improvements such as experience replay.

See:

R ReinforcementLearning Package for Model-free MDP

R MDPtoolbox Package for Model-based MDP